Hello there, Creative People!

Lately, Midjourney’s team has been blessing us with some super useful new features. Today we are going to explore one of them: inpainting, or as Midjourney calls it, Vary Region.

This feature gives us a whole new level of control over our generations. We can now refine the details of our images as never before.

In this article, we will:

Learn how to use the Vary Region feature in Midjourney AI

Look at some examples of how the feature could be used

Explore some ideas for potential use cases of this feature

Inpainting

Inpainting allows us to change parts of a generated image while leaving the rest of the image intact. We no longer have to struggle to get the prompt just right and generate everything perfectly in one go. We now have the option to change parts of the image later.
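For the curious, here is a tiny Python sketch of the general idea behind region-based editing. It is only a conceptual illustration: how Midjourney actually implements the feature is not public, and the function names `rectangle_mask` and `vary_region` are made up for this example. The "images" are small grids of labels rather than real pixels.

```python
# Conceptual sketch only: this is NOT Midjourney's implementation.
# Images are tiny grids of labels standing in for pixels.

def rectangle_mask(width, height, left, top, right, bottom):
    """Return a grid that is True inside the selected rectangle.

    The right and bottom edges are exclusive.
    """
    return [
        [left <= x < right and top <= y < bottom for x in range(width)]
        for y in range(height)
    ]


def vary_region(original, regenerated, mask):
    """Use regenerated pixels where the mask is True, original pixels elsewhere."""
    return [
        [new if selected else old
         for old, new, selected in zip(o_row, n_row, m_row)]
        for o_row, n_row, m_row in zip(original, regenerated, mask)
    ]


# A 4x3 "image" of the original generation and a fully regenerated one.
original = [["robot"] * 4 for _ in range(3)]
regenerated = [["top hat"] * 4 for _ in range(3)]

# Select a small rectangle in the top row (hypothetical selection).
mask = rectangle_mask(4, 3, left=1, top=0, right=3, bottom=1)
result = vary_region(original, regenerated, mask)

for row in result:
    print(row)
```

Only the cells inside the selected rectangle come from the new generation, and everything else stays exactly as it was in the original.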

We can now:

Fix the parts of the image we didn't get right in the initial generation

Customize our favorite generations

Swap backgrounds

Swap characters

And more

How to use the feature

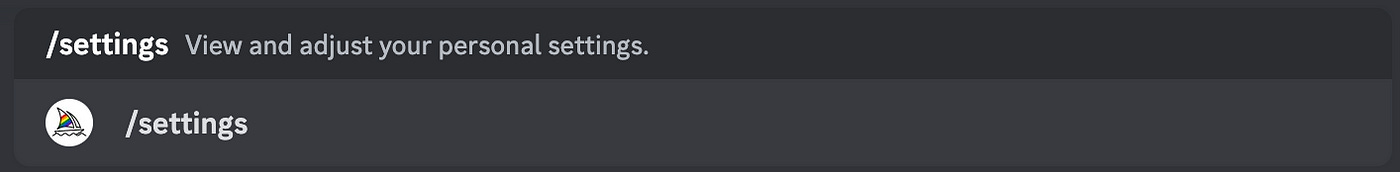

First, use the /settings command to make sure “Remix mode” is enabled. This lets you alter the prompt when inpainting a region of the image.

The "Remix mode" button should be green when it is enabled.

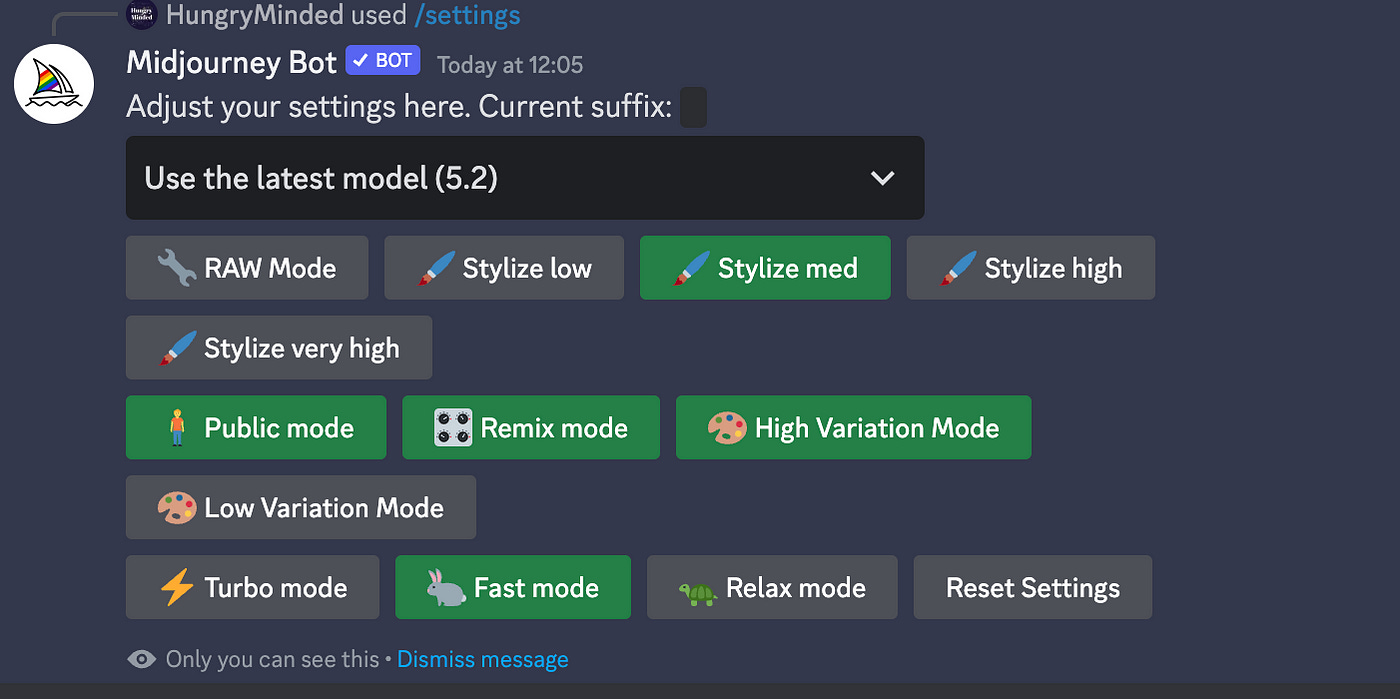

Now you need to upscale any of your generations. As an example, let’s use this image with a simple prompt:

Prompt: A robot with a monocle

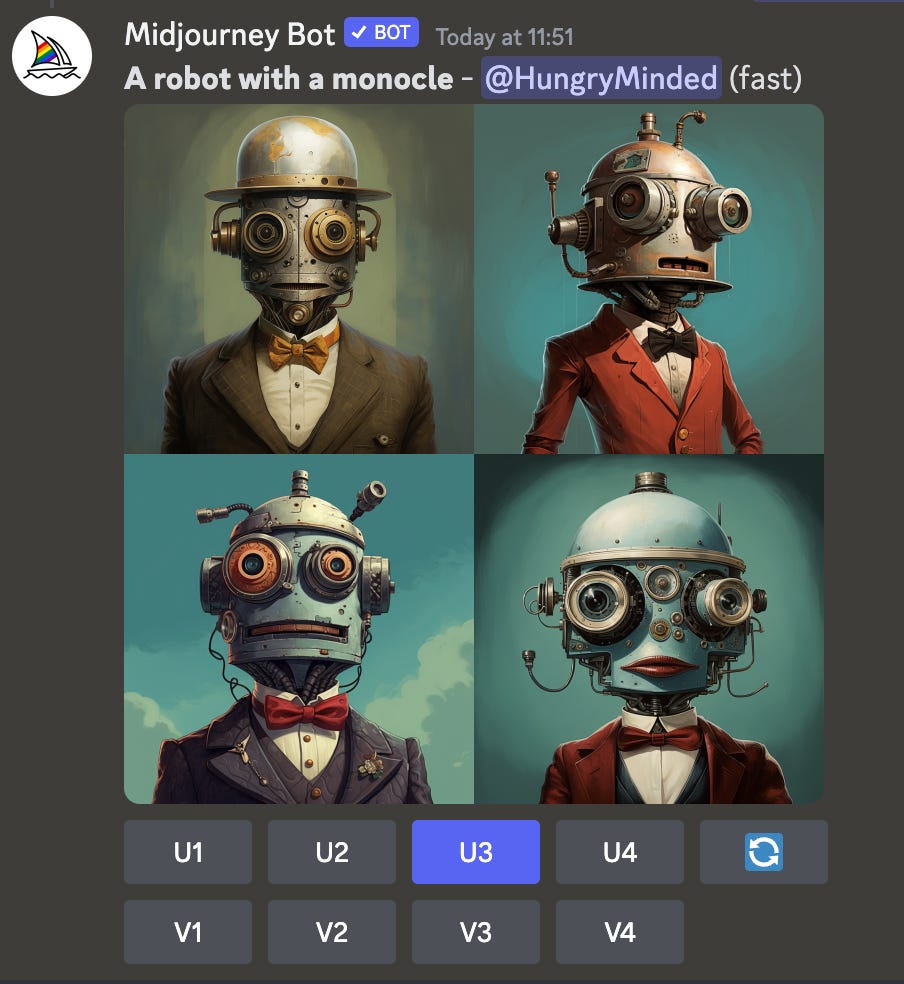

Then we upscale the image we like the most. In my case, it’s the third image, so I pressed the “U3” button to upscale it.

And now we can begin inpainting.

To do so, we need to press the “Vary (Region)” button. The “Vary (Strong)” and “Vary (Subtle)” buttons change the whole image, but the “Vary (Region)” button lets you select the area of the image you want to work with.

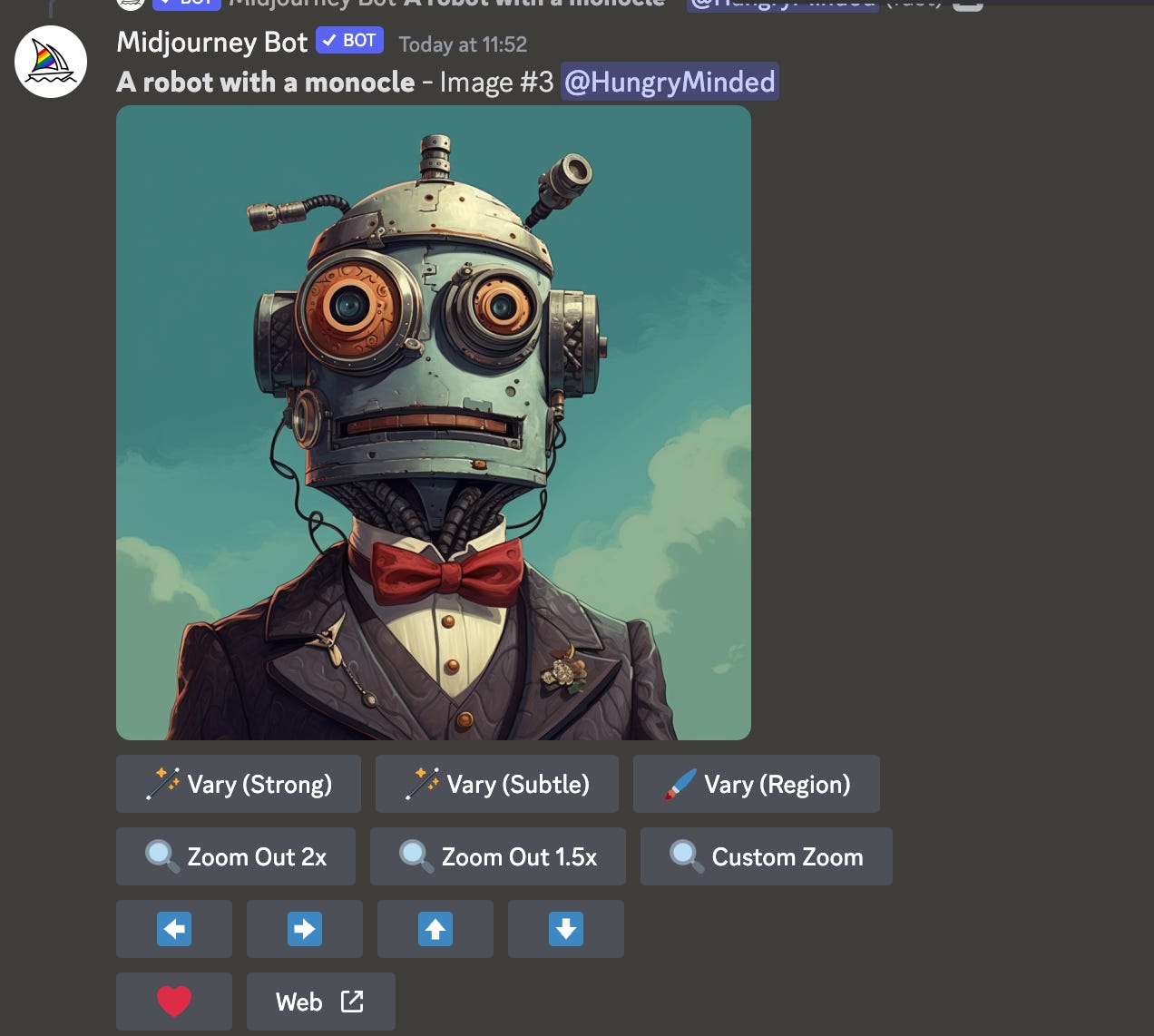

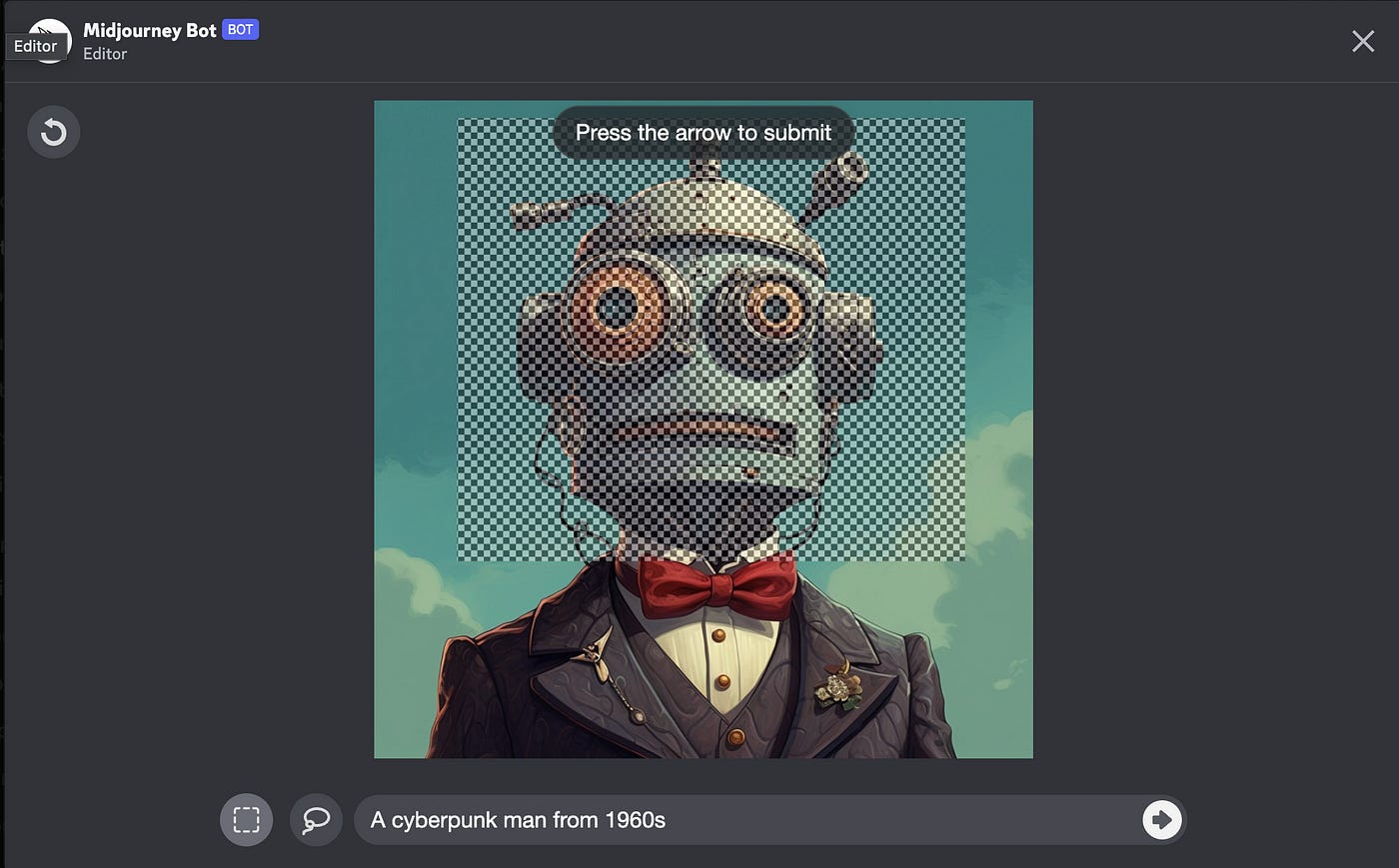

Once you press the button, an editor window will pop up.

You will be able to choose a part of the image using the rectangle or lasso tools and alter the prompt to your liking.

And here’s the result:

What you can do:

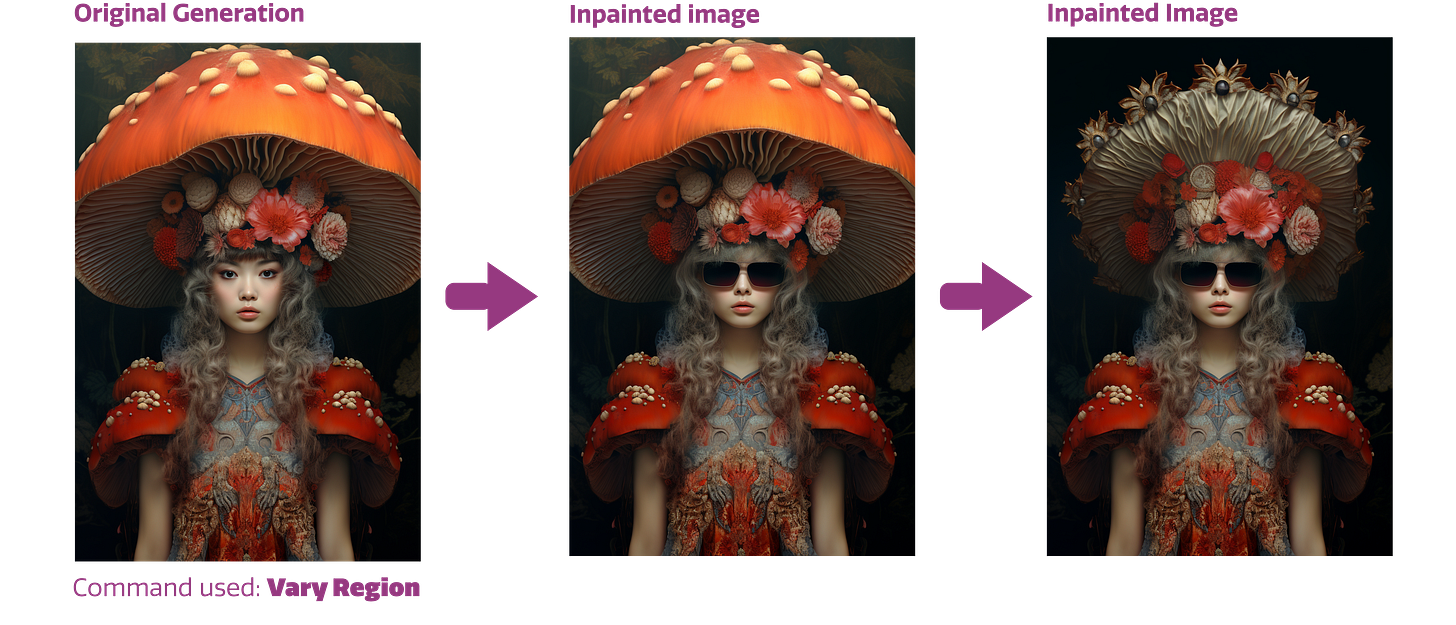

You can easily swap accessories and other small details, or completely change larger parts of the image, without losing what you liked about the original generation.

This also allows you to create different variations of the same thing. As an example, we can now create mockups of different designs on the same backdrop:

You can easily generate different characters on the same background:

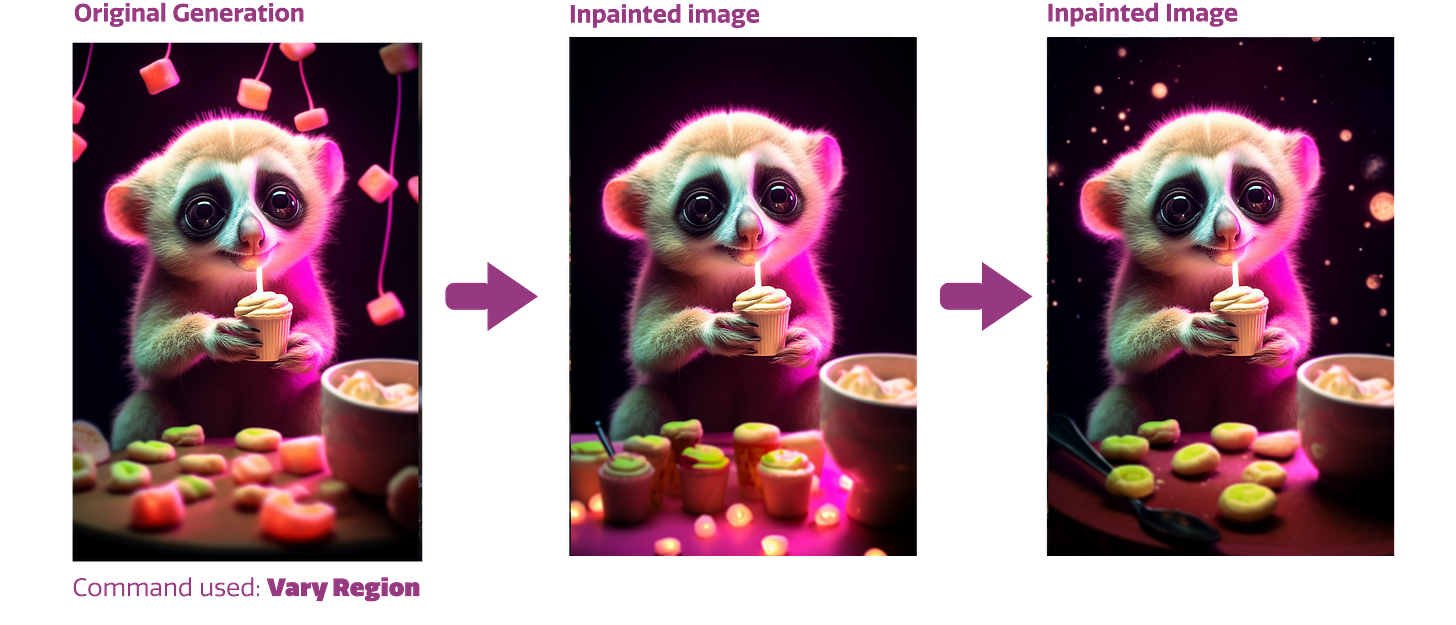

Or change the surroundings of your favorite characters.

Potential use cases

Mockup design (creating custom backgrounds for various designs)

Comics (maintaining consistency of characters or scenes)

Product designs such as playing cards (maintaining consistent backgrounds while changing the subjects)

News in the space

OpenAI announced DALL·E 3 and it looks like it generates text pretty well. Learn more.

ChatGPT can now see, hear, and speak. DALL·E 3 integration is also coming soon. Learn more.

Midjourney News

Do you have any questions, suggestions, or anything else to say? Reply to this e-mail or leave a comment on Substack!

See you again soon! :)